Inside Bias in A.I.

By: Jennifer Herseim | Categories: Alumni Achievements

Artificial Intelligence

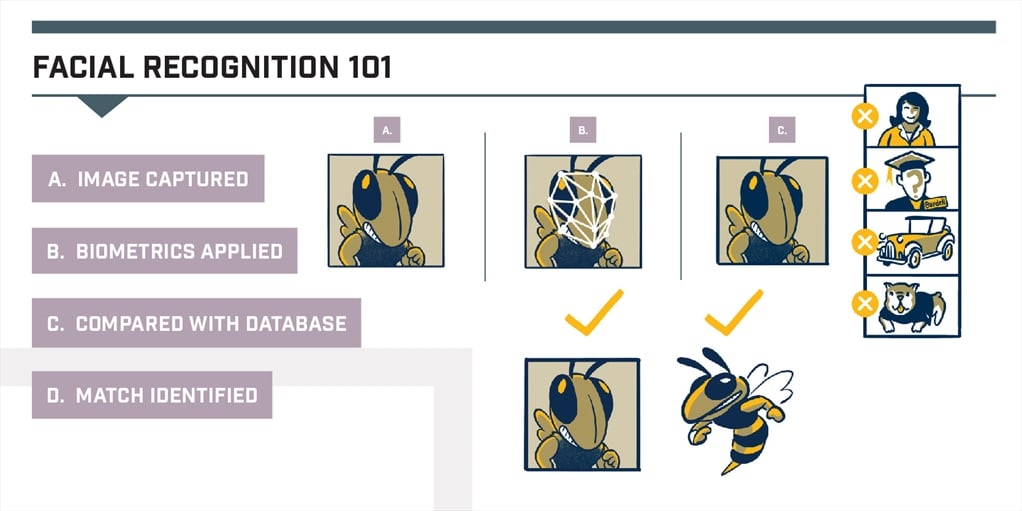

Artificial intelligence is increasingly part of our lives. Facial recognition unlocks our phones. Online advertisements learn our shopping behaviors. Recommendation algorithms even suggest the next romantic comedy we should binge-watch after Bridgerton.

Despite its name, we don’t expect AI to be intelligent all the time. But we generally expect it to be equally correct or incorrect for all of us, regardless of gender or race.

Georgia Tech alumni and researchers are finding that’s not always the case.

“No one wakes up and says they’re going to write an algorithm that discriminates against a certain group of people. The problem is much deeper than that,” says Swati Gupta, a Fouts Family Early Career professor and assistant professor in the H. Milton Stewart School of Industrial and Systems Engineering at Georgia Tech. Gupta’s research includes algorithmic fairness and its impact on hiring practices.

In short, the apple doesn’t fall far from the tree: as humans, we can inadvertently leave traces of ourselves in the technology we develop. As the world finds more uses for data-centered technologies, the consequences of this bias can be significant and can fall disproportionately on certain groups.

Those in the Georgia Tech community are fighting this issue from all sides, illuminating bias in AI, driving the conversation around algorithmic fairness, and working toward a future where AI can help us rise above human flaws.

“I’ve been hammered with this perception that technology is perpetuating our biases. I would counter that humans are biased,” Gupta says. “With algorithms, we have the opportunity to make them less biased. We can find these unfortunate trends in the data and correct them.”

Illuminating Bias

When Joy Buolamwini, CS 12, started school at MIT, she noticed something strange about the facial tracking software she was given: it wouldn’t detect her face until she put on a white mask. In Coded Bias, a documentary released last year, Buolamwini reflects on that moment. “I’m thinking all right, what’s going on here? Is it the lighting conditions? Is it the angle at which I’m looking at the camera? Or is there something more?” she asks. “That’s when I started looking into issues of bias that can creep into technology.”

Buolamwini would go on to co-author the study Gender Shades, which illuminated bias in facial classification systems. Her TED Talk on algorithmic bias has garnered more than 1 million views, and National Geographic recognized her work by naming her a 2020 Emerging Explorer. She founded the Algorithmic Justice League, which uses art and research to raise awareness of bias in AI.

Gender Shades looked at the accuracy of three facial classification systems that detect a person’s gender. When results were disaggregated by gender and skin tone, the study revealed that the systems performed better on male faces than on female faces, and that error rates were much higher for darker-skinned females (ranging from 20.8% to 34.7%) than for lighter-skinned males (ranging from 0.0% to 0.8%). Buolamwini and her co-author found that the data used to train these algorithms was made up largely of male and lighter-skinned faces.
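To make the idea of disaggregation concrete, here is a minimal Python sketch that computes error rates separately for each skin-tone and gender subgroup, alongside the single overall figure that can hide them. The record fields (`skin_tone`, `gender`, `predicted`, `actual`) and the toy data are hypothetical illustrations, not the Gender Shades data set or its methodology.

```python
from collections import defaultdict


def overall_error_rate(records):
    """Fraction of all predictions that were wrong, ignoring group membership."""
    wrong = sum(1 for r in records if r["predicted"] != r["actual"])
    return wrong / len(records)


def disaggregated_error_rates(records):
    """Error rate for each (skin_tone, gender) subgroup.

    Each record is a dict with hypothetical keys:
    'skin_tone', 'gender', 'predicted', and 'actual'.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for r in records:
        key = (r["skin_tone"], r["gender"])
        totals[key] += 1
        if r["predicted"] != r["actual"]:
            errors[key] += 1
    return {key: errors[key] / totals[key] for key in totals}


# Hypothetical toy data: 8 lighter-skinned male faces, all classified correctly,
# and 2 darker-skinned female faces, one of which is misclassified.
toy = [
    {"skin_tone": "lighter", "gender": "male", "predicted": "male", "actual": "male"}
] * 8 + [
    {"skin_tone": "darker", "gender": "female", "predicted": "female", "actual": "female"},
    {"skin_tone": "darker", "gender": "female", "predicted": "male", "actual": "female"},
]

print(overall_error_rate(toy))         # 0.1
print(disaggregated_error_rates(toy))  # {('lighter', 'male'): 0.0, ('darker', 'female'): 0.5}
```

With this toy data, an overall error rate of 10% masks a 50% error rate for the darker-skinned female subgroup, the kind of gap that only appears when results are broken out by group.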

The impact of bias in facial recognition has already begun to lead to real-world consequences. In August of 2020, a Black man was arrested by the Detroit Police Department after a facial identification system incorrectly matched the man’s face from surveillance footage.

Law enforcement has also used other systems in which algorithmic bias can cause problems, Gupta adds. The Los Angeles Police Department previously used a predictive policing tool that relied on arrest data to predict where crimes would happen in the future. “The problem was the data looked at where arrests occurred, not where the crimes actually occurred,” Gupta says. Research has shown how that could lead to false assumptions about where crime is happening and raise questions about overpolicing certain neighborhoods, she says.
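The problem Gupta describes is a feedback loop, and a toy model shows why it can persist. The Python sketch below is a hypothetical simplification, not the LAPD tool’s actual method: it assumes two neighborhoods with identical true crime rates, sends each round’s patrols in proportion to the previous round’s recorded arrests, and assumes arrests are recorded only where officers are present.

```python
def simulate_patrols(true_crime, initial_patrols, rounds):
    """Toy feedback loop (hypothetical model): patrols follow recorded arrests.

    true_crime: relative crime rate per neighborhood (equal values = no real difference)
    initial_patrols: starting patrol units per neighborhood
    """
    patrols = list(initial_patrols)
    total = sum(patrols)
    for _ in range(rounds):
        # Arrests are only recorded where officers are, so they scale with patrols.
        arrests = [rate * units for rate, units in zip(true_crime, patrols)]
        recorded = sum(arrests)
        # Next round's patrols are allocated in proportion to recorded arrests.
        patrols = [total * a / recorded for a in arrests]
    return patrols


# Two neighborhoods with the same true crime rate but an uneven starting allocation.
print(simulate_patrols(true_crime=[2, 2], initial_patrols=[70, 30], rounds=10))
# [70.0, 30.0]
```

Because the arrest data only reflects where officers already were, the initial 70/30 split never corrects itself, even though crime in the two neighborhoods is the same.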

Where Bias Creeps In

To understand how bias can creep into AI, consider how these systems are developed. “All AI is, is a system that’s finding patterns in data and is able to infer things in the future, or ‘the wild’, based on patterns that it previously has seen,” explains Nashlie Sephus, MS ECE 10, PhD ECE 14.

Sephus is a Tech Evangelist at Amazon AWS AI, where she focuses on fairness, mitigating bias, and understanding how technology impacts communities.

She joined Amazon in 2017 after the company acquired Partpic, where Sephus was chief technology officer. Partpic helped customers identify screws, bolts, and other hardware parts using computer vision. “Whether it’s screws, nuts, washers—or faces or speech—you have to train these models to be able to infer new data that comes at them,” Sephus says. When the training data sets are not representative, the system won’t be able to properly identify new data. Simplified: “Garbage in, garbage out,” Sephus says.
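A first step toward catching unrepresentative training data is simply counting who is in it. This minimal Python sketch reports each group’s share of a labeled training set and flags groups below a chosen threshold; the group labels, the 80/20 split, and the 30% threshold are hypothetical choices for illustration, and balanced counts alone do not guarantee a fair model.

```python
from collections import Counter


def group_shares(labels):
    """Fraction of training examples that belong to each group."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(labels, min_share):
    """Groups whose share of the training data falls below min_share (a hypothetical cutoff)."""
    return sorted(g for g, share in group_shares(labels).items() if share < min_share)


# Hypothetical training set: 80 lighter-skinned faces and 20 darker-skinned faces.
train_groups = ["lighter"] * 80 + ["darker"] * 20

print(group_shares(train_groups))           # {'lighter': 0.8, 'darker': 0.2}
print(underrepresented(train_groups, 0.3))  # ['darker']
```

A check like this only measures representation; it says nothing about label quality or the annotation and confounding issues Sephus raises, so it is a starting point rather than an audit.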

Although training a system to identify hardware is fundamentally the same as training it to recognize faces, the cost of a wrong answer varies greatly with the application. When a system doesn’t recognize your face to unlock your phone, that’s an inconvenience; when it’s used by law enforcement to make an arrest, a wrong match could have serious consequences. Last June, Amazon announced a one-year moratorium on police use of its facial recognition technology.

“We’re at a place where technology has moved so fast, government and policies have not been able to keep up,” Sephus says. “We need to take a step back to gather stakeholders to decide what needs to happen before we can use these technologies in our everyday lives.”

Bias is more than just a data problem, Sephus adds. “You have to consider the end-use, and then adapt, modify, and be sensitive about the risks that can occur.”

Annotation bias and confounding variables can also lead to problems. Consider the example of facial gender classification systems. A system could incorrectly identify women, for instance, because it was created with a narrow set of variables that did not account for women who have short hair. Beyond improving the accuracy of such a system, this application raises other questions, Sephus says, such as “Should we even be doing gender detection if we can only do it visually in terms of binary gender? Does that discriminate against transgender and nonbinary groups?”

Whether to use AI in certain applications is a valid question that programmers should be asking, Gupta says. Even if a program has 99% accuracy, is the application ethical? Do we want a society based on this program?

As an example, Gupta came across a study of a technology that uses a person’s photo to predict whether they would commit a felony. “Even if you showed a high accuracy, maybe it’s off on certain demographics and it completely changes people’s lives. I think there’s a difference between when we should deploy a technology and when we should not.”

Building a Better AI

Programmers can help policymakers and others understand where bias exists to limit its harm. Gupta believes transparency is key. “If you know that the program has higher error rates for certain groups, make that information transparent so that users can put in place additional safeguards,” she says.

Programmers can also act as portfolio managers by showing how different decisions result in different outcomes, Gupta says. With more thought, Gupta believes that algorithms can lead to less biased decision-making because, unlike humans, algorithms can be audited. “My hope is that with algorithms we can achieve a greater consistency in decisions, create more fairness, and mitigate some of these biases,” she says.

Change can also come from having more representation in development and decision-making so problems can be spotted before a technology is deployed. Sephus, who has worked in South Korea and France, at startups and large corporations, says she’s usually the only person in the room who looks like her—a Black woman from the South with a PhD in computer and electrical engineering.

“I used to think of it as a disadvantage, but now I look at it as an opportunity to expose somebody to something different,” Sephus says. She recognizes the opportunity she has to create change from inside a corporation like Amazon. “I’m encouraged to go to work every day because I know that I’m at this huge corporation that has so much impact and I can be an influence from the inside,” she says.

Sephus is also working outside Amazon to expand opportunities for others in technology. She founded The Bean Path, a nonprofit in her hometown of Jackson, Miss., to bring technologists to libraries to offer free technical advice and guidance. The project has since snowballed, and Sephus recently purchased space in downtown Jackson that The Bean Path and partners plan to turn into a 14-acre multi-use tech hub. And in Atlanta, Sephus has joined seven other Black technologists, many of whom are Tech alumni, to establish a co-development studio called KITTLabs, located a few blocks from Tech’s main campus.

“It’s about strengthening the community,” she says. “Especially in terms of fairness and biases in technology, we want the most diverse future of technology that we can get.”